LF’s Project Alvarium: Ensuring Trusted IoT Data at the Edge with DLT

May 24, 2023

Supported by Dell, IOTA, Intel, and others, this open-source project assigns trust scores to sensor data, using a distributed ledger as immutable storage.

Background: the issue of trust

As the number and variety of IoT devices grow exponentially, so does the amount of data produced and shared between different systems and organizations. However, the information gathered may be inaccurate, incomplete, outdated, tampered with, or maliciously manipulated. For example, AI tools can automatically generate fake data that looks real enough that humans cannot tell the difference. So, how can we ensure that the information we use is valid and reliable?

Currently, the answer to this question involves a zero-trust approach, where all data is considered untrustworthy until proven otherwise. While this option works for security, it can be difficult to scale across IoT systems with thousands of devices.

Understanding the problem, back in 2019, Steve Todd of Dell was actively exploring the ideas of applying trust insertion technologies to IoT scenarios, looking for ways to ensure data confidence at the edge. Eventually, the experiments within Dell led to a concept of data confidence fabric (DCF)—an architecture that could help to measure how trustworthy sensor data is as it moves from devices to apps.

In August 2019, the first DCF prototype was written using Go, EdgeX Foundry, Dell Boomi, VMware Blockchain, Lightwave, and other tools. In October 2019, Dell teamed up with IOTA Foundation to contribute the DCF code base to the Linux Foundation, seeding Project Alvarium. A year later, a comprehensive paper was delivered, summarizing the vision behind the project.

However, the plans behind Alvarium were put on hold due to the COVID-19 pandemic, the official wiki says. Still, in October 2020, Dell and Intel delivered a DCF proof of concept intended for use in a privacy-preserving computer vision system for smart cities. By February 2021, Dell’s initial DCF was reengineered with nonproprietary DLT tools, such as IOTA’s Tangle and Streams, to align with the project’s open strategy.

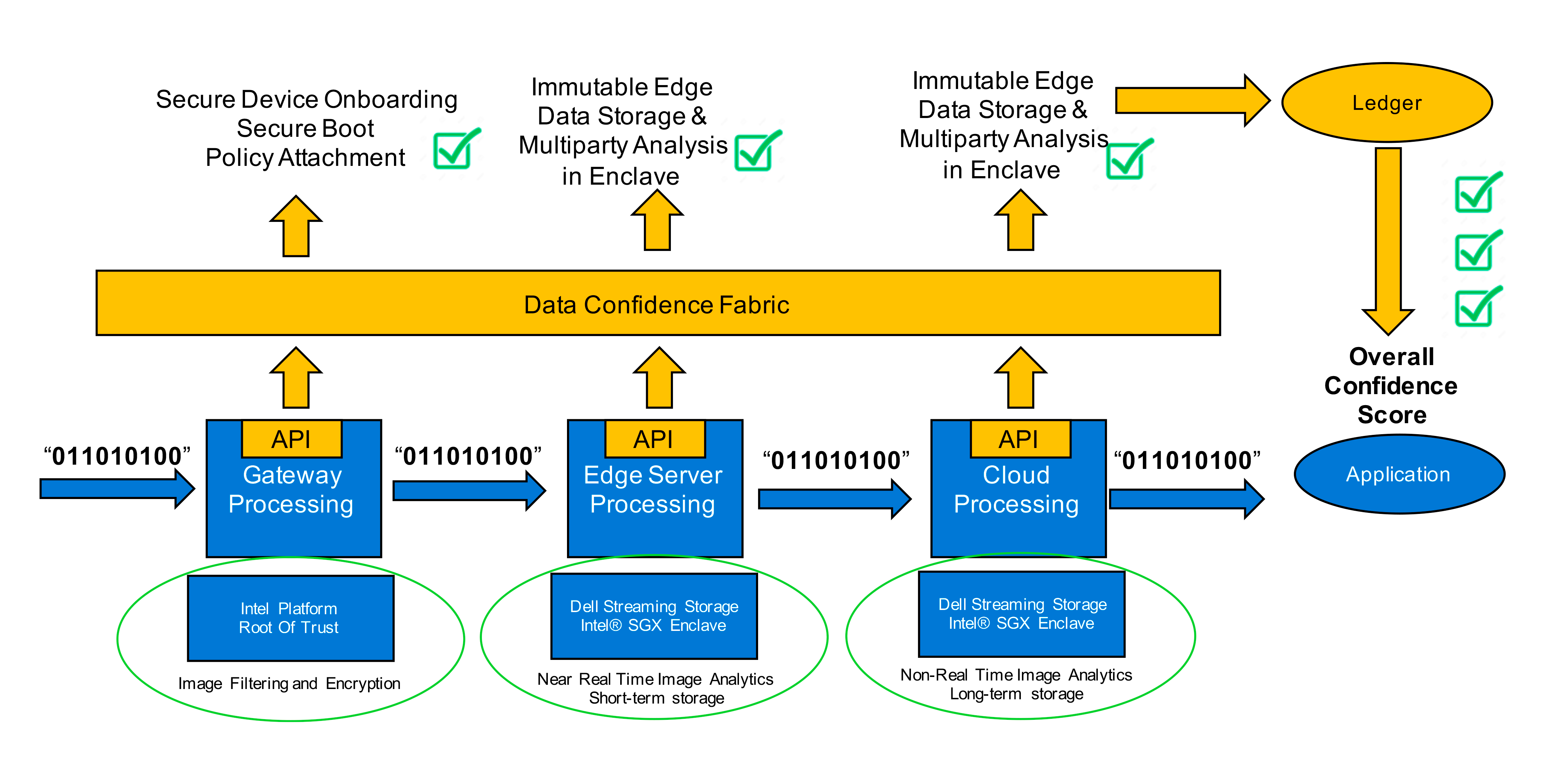

A POC created by Dell and Intel for AI and IoT scenarios (image credit)

In October 2021, Alvarium found its home at LF Edge, an umbrella organization within the Linux Foundation working on an open, interoperable framework for edge computing. The project is currently at Stage 1 of development, “At Large.” This means that it has been accepted by the Technical Advisory Council and has a clear alignment with the LF Edge mission statement.

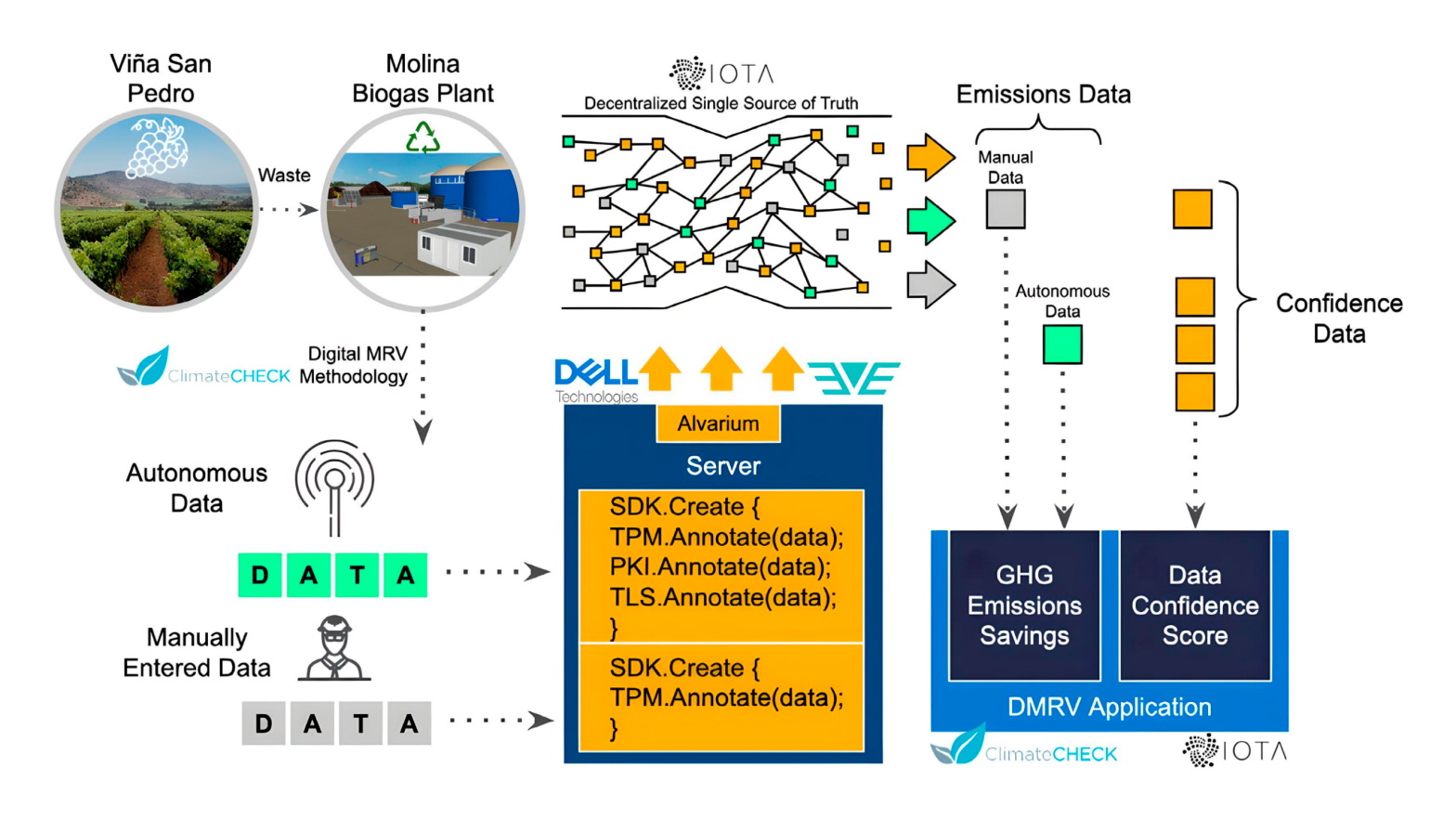

After LF Edge welcomed the project, during 2021–2022, the team focused on a pilot real-life DCF implementation for a biodigester in Molina, Chile. This plant processed organic feedstock to generate energy, with methane reduction data traveling from sensors over a DCF to the server running the Alvarium SDK. Since then, a few webinars and conference sessions have shed some light on the details of the implementation.

How Project Alvarium works

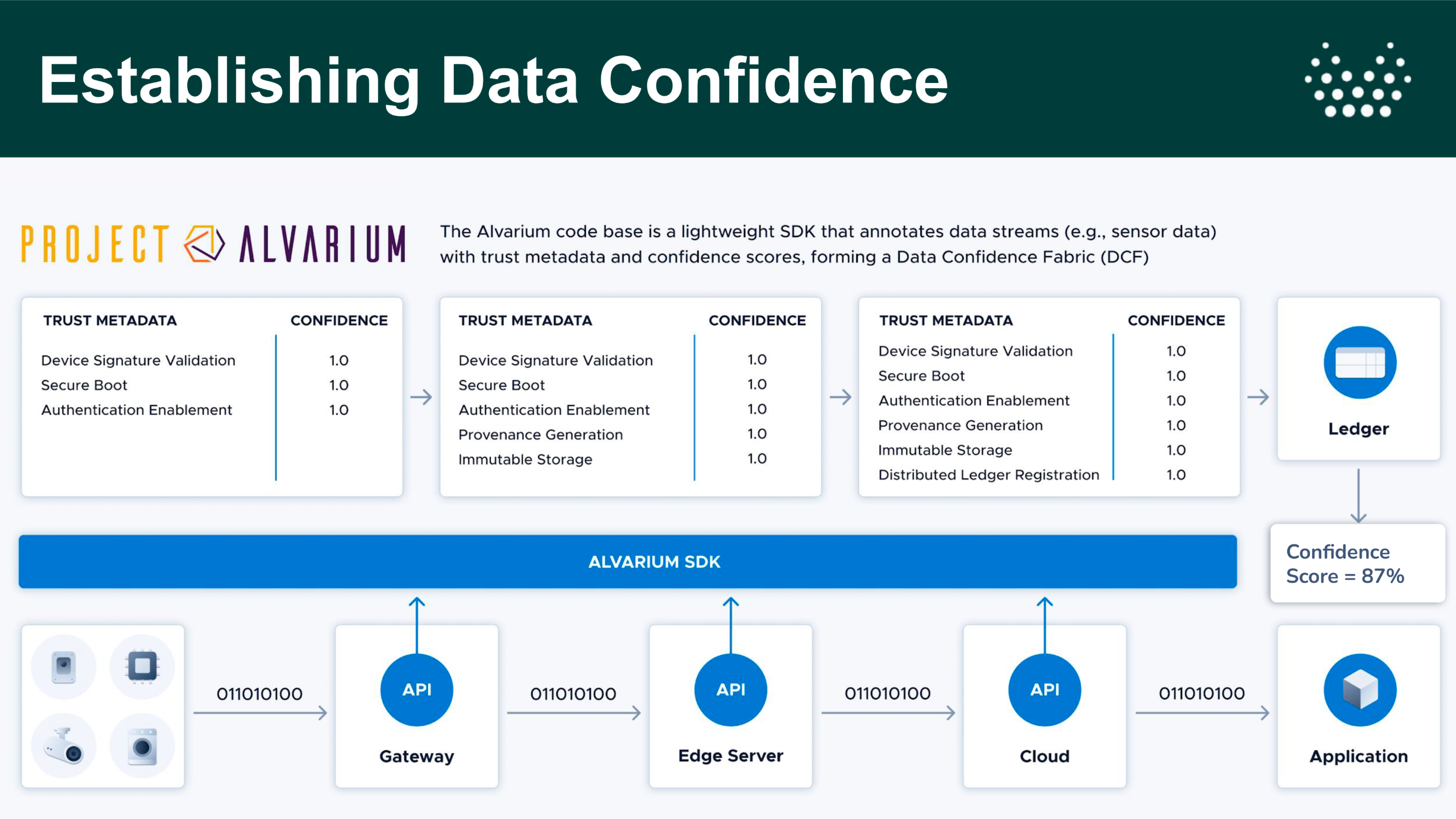

The goal of Project Alvarium is to create an open framework and an SDK for developing a data confidence fabric. In brief, the code helps to annotate IoT/sensor telemetry streams with trust metadata and confidence scores, using a distributed ledger for immutable storage. Users can then compare and prioritize different sources of data based on the trust scores, as well as automate audit and compliance.

In a webinar held in March 2023, Steve Todd explained that the trust scores take into account the source and quality of the data, the security of the data transmission, compliance, reputation of the providers, etc. Alvarium considers the presence of a trusted platform module (TPM), hash, authentication, TLS encryption, signature verification, and immutable storage.
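As a rough illustration of how such factors could roll up into a single number, here is a minimal Python sketch. It assumes equal weights and treats a missing annotation as a failed check. The article does not describe Alvarium's actual scoring algorithm, and the names `Annotation`, `FACTORS`, and `confidence_score` are hypothetical, not part of the Alvarium SDK.

```python
from dataclasses import dataclass

# Hypothetical factor names, taken from the list of checks mentioned above.
FACTORS = ("tpm", "hash", "authentication", "tls", "signature", "immutable_storage")


@dataclass(frozen=True)
class Annotation:
    kind: str        # which factor was checked
    satisfied: bool  # whether the check passed


def confidence_score(annotations):
    """Return the fraction of known factors that have a passing annotation.

    Assumptions: equal weights, and a factor without an annotation counts as failed.
    """
    passed = {a.kind for a in annotations if a.satisfied and a.kind in FACTORS}
    return len(passed) / len(FACTORS)


reading = [
    Annotation("tpm", True),
    Annotation("hash", True),
    Annotation("tls", True),
    Annotation("signature", False),
]
print(confidence_score(reading))  # 3 of 6 factors pass: 0.5
```

A real fabric would likely weight factors differently per organization, which matches the idea that each DCF is tuned to its owner's needs.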

The process of assigning a confidence score (image credit)

The importance of collaboration in creating an environment of trust is denoted in the name Alvarium, which is the Latin word for “beehive.” The framework can also combine multiple trust fabrics to generate confidence scores based on how the data was collected and processed through different stages and actors. According to Steve Todd, the Alvarium framework is intended to provide an algorithm for calculating confidence scores, rather than the one and only DCF. Instead, each organization is expected to set up a DCF with trust insertion components that fit the company’s specific needs.

“No single entity can own the trust—after all, imagine if one company owned the Internet.” —Steve Todd, Dell

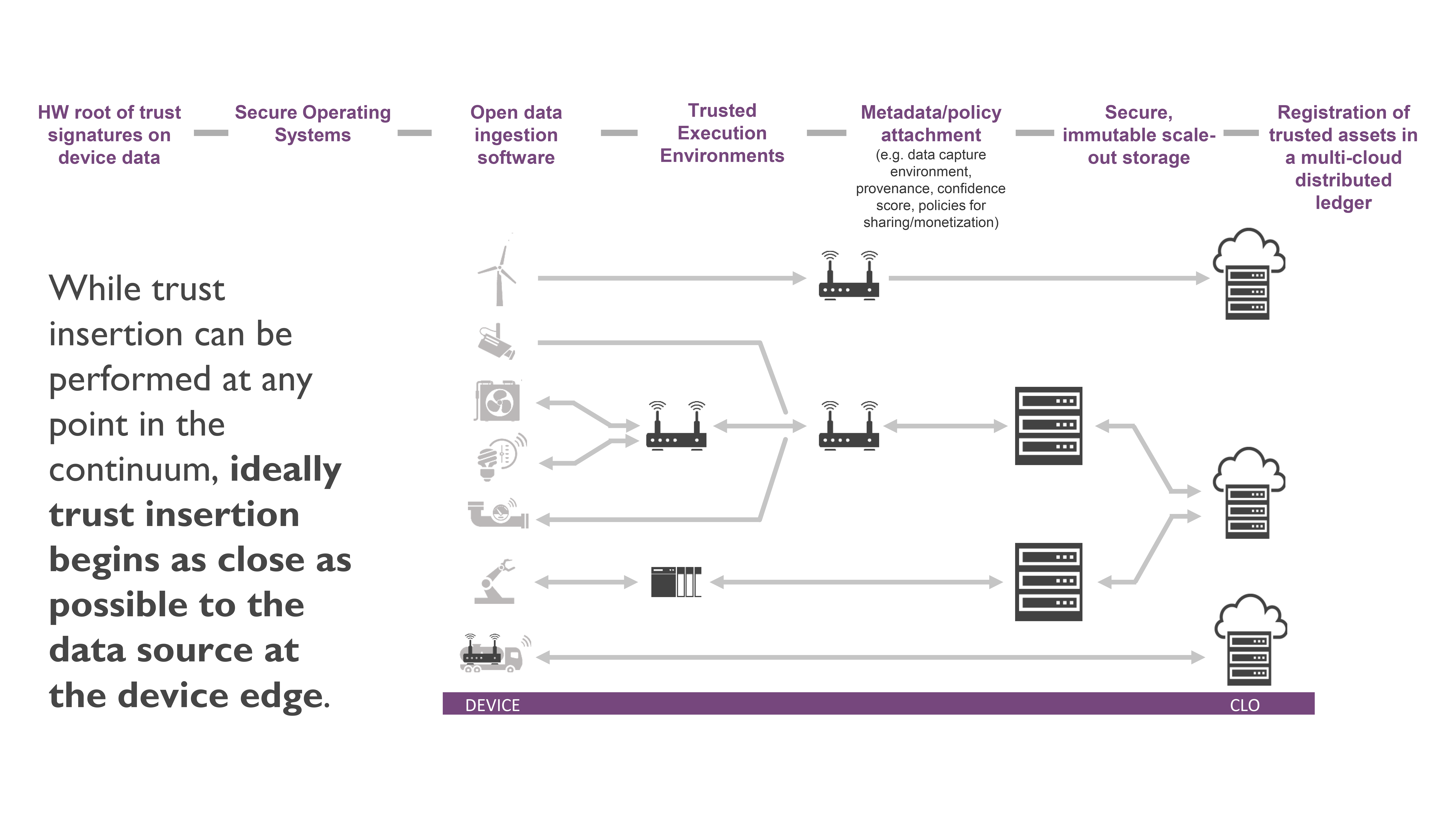

Dell notes that the TPM is essential for establishing a hardware root of trust, as it is responsible for signatures on device data, secure boot, and secure onboarding. The latter refers to performing an initial handshaking protocol between the TPM and the management software that oversees the health of gateway devices. A DCF can also use a different hardware root of trust, such as a trusted execution environment that runs apps in a secure manner.

To protect against tampering, the fabric can verify whether a hash was generated for a piece of data or not and determine which hashing algorithm was used. Furthermore, a DCF can identify and record threat activity and take it into consideration when assigning a confidence score.
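The hash check described here can be sketched with Python's standard `hashlib` module. This is only an illustration: the function name `verify_hash`, the set of accepted algorithms, and the sample payload are assumptions, not Alvarium's implementation.

```python
import hashlib
import hmac

# Assumption: only these algorithms are accepted in this sketch.
SUPPORTED = {"sha256", "sha512"}


def verify_hash(payload, claimed_hex, algorithm="sha256"):
    """Return (hash_present, hash_valid) for a payload and its claimed hex digest."""
    if not claimed_hex:
        return (False, False)  # no hash was generated for this piece of data
    if algorithm not in SUPPORTED:
        raise ValueError(f"unsupported algorithm: {algorithm}")
    actual = hashlib.new(algorithm, payload).hexdigest()
    # compare_digest avoids leaking timing information during the comparison
    valid = hmac.compare_digest(actual.encode(), claimed_hex.lower().encode())
    return (True, valid)


payload = b"ch4_ppm=1.9"
digest = hashlib.sha256(payload).hexdigest()
print(verify_hash(payload, digest))         # (True, True)
print(verify_hash(b"ch4_ppm=0.1", digest))  # (True, False)
print(verify_hash(payload, None))           # (False, False)
```

Both results, whether a hash exists and whether it matches, could be recorded as separate annotations.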

Whenever a piece of data passes through an edge node, the node calls an API that detects and annotates the operation being performed. Alvarium can check if a device has secure authentication and can request logs describing access attempts. According to Dell and Intel’s white paper, the DCF collects this and other additional metadata and attaches it to the packet. Trevor Conn, the principal architect behind Project Alvarium, explained the process in a virtual meeting on March 13, 2023.

“We came up with a concept called trust insertion points, that are essentially places along that path that the data is taking where we can perform these annotations. At the end of the data flow, there’s a measure of trust that’s calculated resulting from the annotations that are captured at each insertion point.” —Trevor Conn, Dell
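The idea of trust insertion points can be sketched as a chain of functions, each adding an annotation as the data passes by, with a score computed at the end of the flow. Everything below is a hypothetical illustration: the `Envelope` type, the three insertion points, and the simple pass ratio are assumptions, not the Alvarium SDK's API or scoring method.

```python
from dataclasses import dataclass, field


@dataclass
class Envelope:
    payload: dict   # the sensor reading itself
    context: dict   # facts observable along the path (assumed inputs)
    annotations: list = field(default_factory=list)


def annotate(env, point, check, passed):
    """Record which insertion point ran which check, and the outcome."""
    env.annotations.append({"point": point, "check": check, "passed": bool(passed)})


def at_device(env):
    annotate(env, "device", "signature", env.context.get("signed"))


def at_gateway(env):
    annotate(env, "gateway", "tls", env.context.get("tls"))


def at_server(env):
    annotate(env, "server", "authentication", env.context.get("authenticated"))


def run_path(env, insertion_points):
    """Pass the envelope through each point, then score the collected annotations."""
    for point in insertion_points:
        point(env)
    if not env.annotations:
        return 0.0
    passed = sum(a["passed"] for a in env.annotations)
    return passed / len(env.annotations)


env = Envelope({"ch4_ppm": 1.9}, {"signed": True, "tls": True, "authenticated": False})
score = run_path(env, [at_device, at_gateway, at_server])
print(len(env.annotations), round(score, 2))  # 3 0.67
```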

Example of end-to-end trust insertion points (image credit)

Trust scores are stored in a distributed ledger, such as IOTA’s Tangle or Ethereum. This way, the storage provides immutability, transparency, and auditability.
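To show why ledger-style storage makes tampering detectable, here is a minimal in-memory hash chain in Python. It is a stand-in for illustration only: it does not use IOTA's Tangle or Ethereum, it has no distribution or consensus, and the class name `HashChainLog` is hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class HashChainLog:
    """Append-only log where each entry's hash covers the previous entry's hash."""

    def __init__(self):
        self.entries = []

    def append(self, record):
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = json.dumps(record, sort_keys=True)
        entry_hash = hashlib.sha256((prev + body).encode()).hexdigest()
        self.entries.append({"prev": prev, "body": body, "entry_hash": entry_hash})
        return entry_hash

    def verify(self):
        """Recompute every hash; any edited entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            expected = hashlib.sha256((prev + entry["body"]).encode()).hexdigest()
            if entry["prev"] != prev or entry["entry_hash"] != expected:
                return False
            prev = entry["entry_hash"]
        return True


log = HashChainLog()
log.append({"reading_id": "r1", "score": 0.83})
log.append({"reading_id": "r2", "score": 0.50})
print(log.verify())  # True

log.entries[0]["body"] = json.dumps({"reading_id": "r1", "score": 1.0}, sort_keys=True)
print(log.verify())  # False
```

A local log like this can still be rewritten wholesale by whoever holds it. A distributed ledger closes that gap by replicating entries across independent parties.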

A pilot implementation for a wine producer

During a webinar in March 2023, the project’s team shared the details of a pilot implementation for VSPT Wine Group—one of the largest wine producers in Chile. The group operates the world’s first biogas plant that uses harvest waste as its only fuel to provide the Viña San Pedro winery with 60% of its energy needs. The company used a set of sensors to provide an accurate real-time view of the facility’s carbon footprint. However, creating certified emission reduction statements required a solution that could verify the accuracy of collected data.

Usually, verifying emission statements involves a person coming to the facility and taking manual measurements with a clipboard and a spreadsheet. The process is called measurement, reporting, and verification (MRV)—for this facility, it took 24–48 months. In addition to taking a lot of time, manual MRV processes can leave a significant amount of room for human error. Mathew Yarger—a cofounder of the DigitalMRV platform and an advisor at IOTA—demonstrated a survey estimating an average error rate of 30–40% in the emissions measurements.

Furthermore, a 2019 study revealed that the US fertilizer industry produced 29,000 tons of methane emissions annually. This is over 100x more than their self-reported estimate of 200 tons.

“There is a huge number of errors that come from the auditing practices and the methodologies that are used to quantify the emissions and what’s happening. Because they are heavily analog and it’s not taking data in real time, there is always room for human error…Organizations are reporting the emissions that they think they’re contributing into the atmosphere, and they’re trying to account for those appropriately, but then it comes out that it’s a drastically different number.” —Mathew Yarger, DigitalMRV

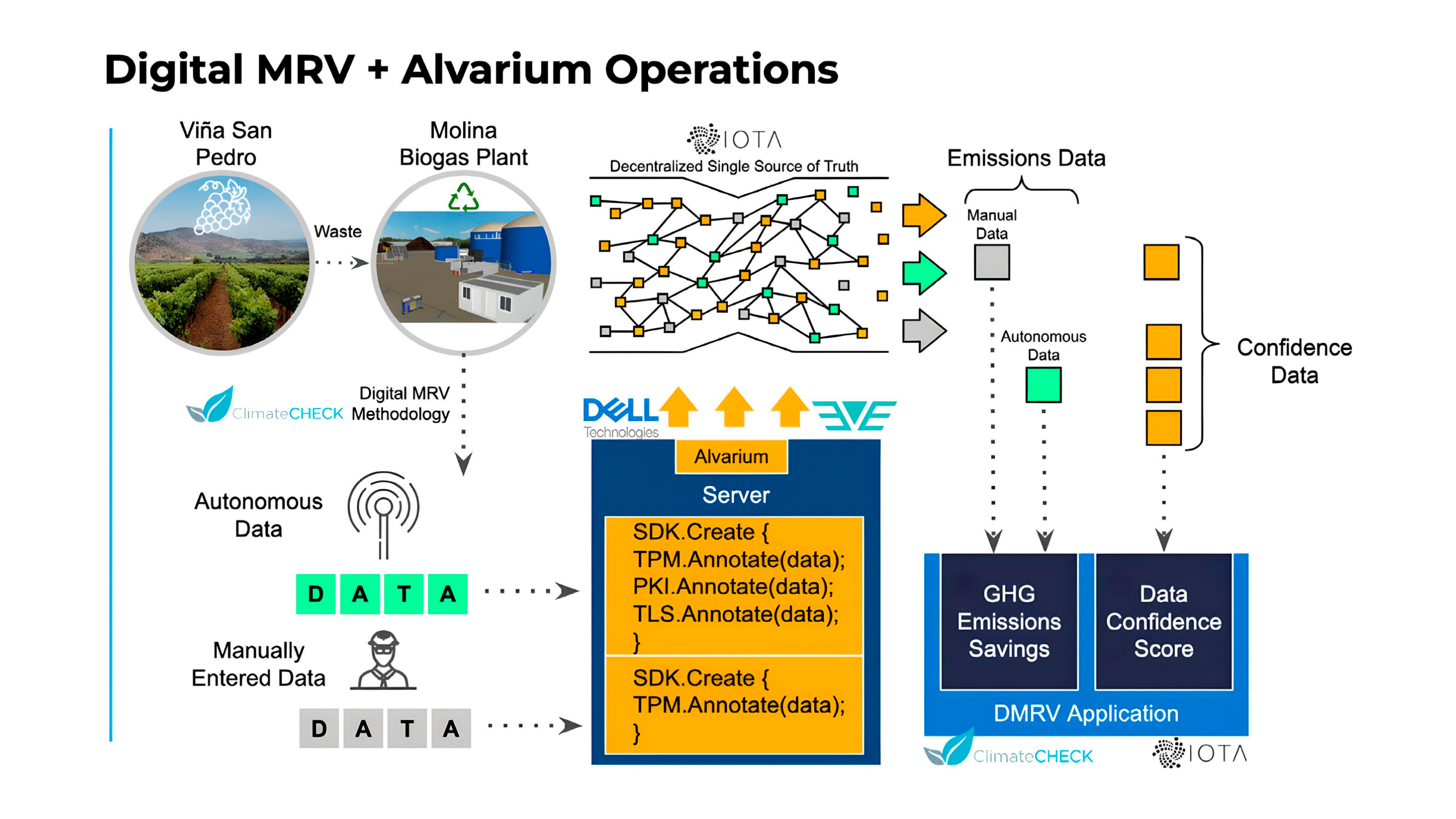

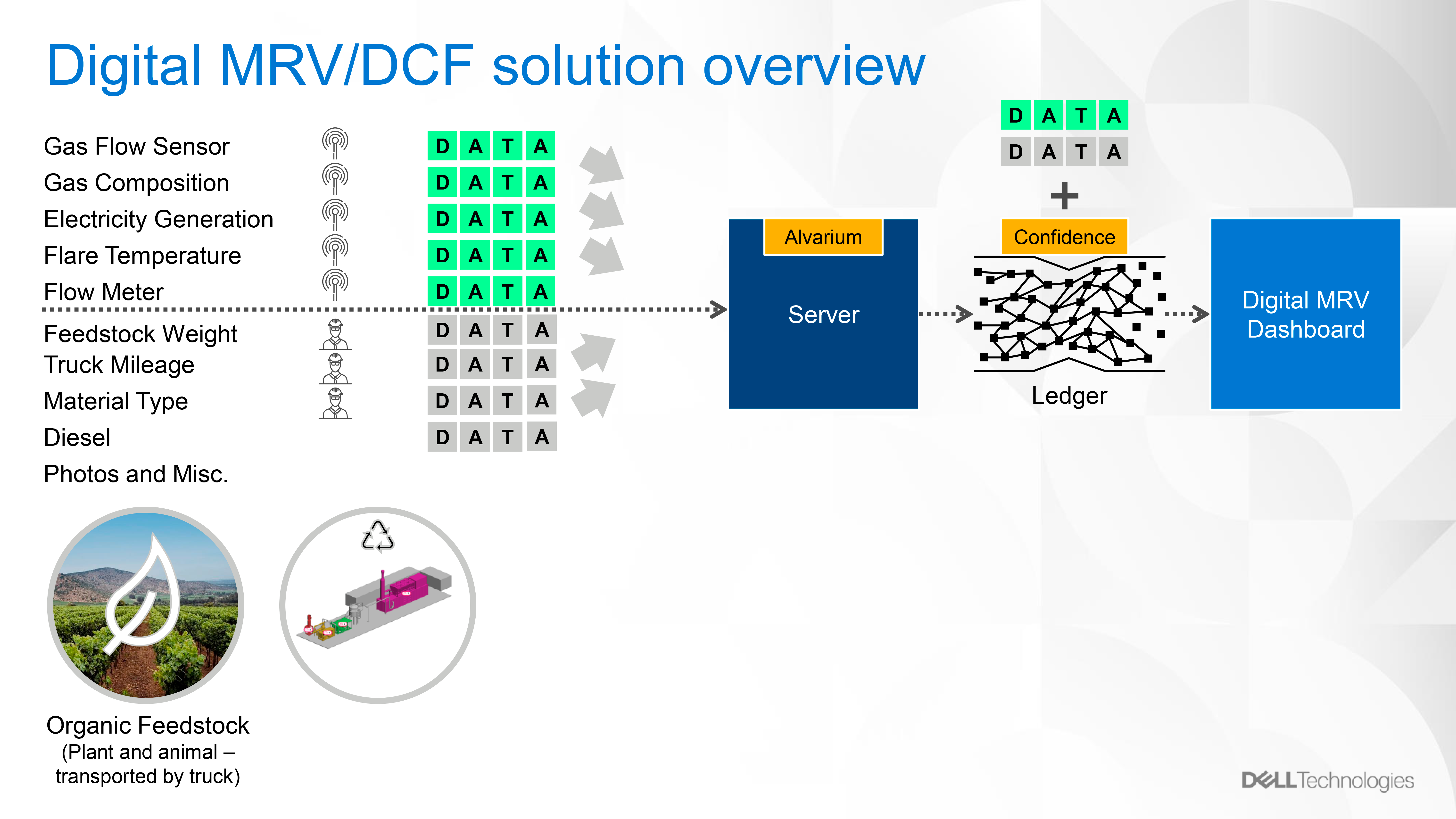

Alvarium-backed data flow for the biogas plant in Molina (image credit)

To address these issues, VSPT Wine Group collaborated with Dell, ZEDEDA, IOTA, ClimateCHECK, and DigitalMRV to automate the MRV process and ensure data confidence and compliance. As a result, the new system provided transparency and auditability, while reducing the time required for MRV to just 4–6 weeks. This solution leverages Project Alvarium’s DCF and Project EVE’s ability to bring cloud computing to remote edge locations.

When presenting the results, Kathy Giori of ZEDEDA also emphasized the importance of having security “running all the way down to the bare metal.” A Dell server with EVE installed was shipped to Chile and connected to the network. This served as a control plane, allowing the team to update and manage applications without the need to go on-site. Project Alvarium provided a secure application plane by helping to give trust scores to all data inputs and generate a methane emission reduction statement.

“EVE locks down the bare metal, IOTA secures and routes the data, and Alvarium applies the confidence score so that the auditors know they can trust the source.” —Kathy Giori, ZEDEDA

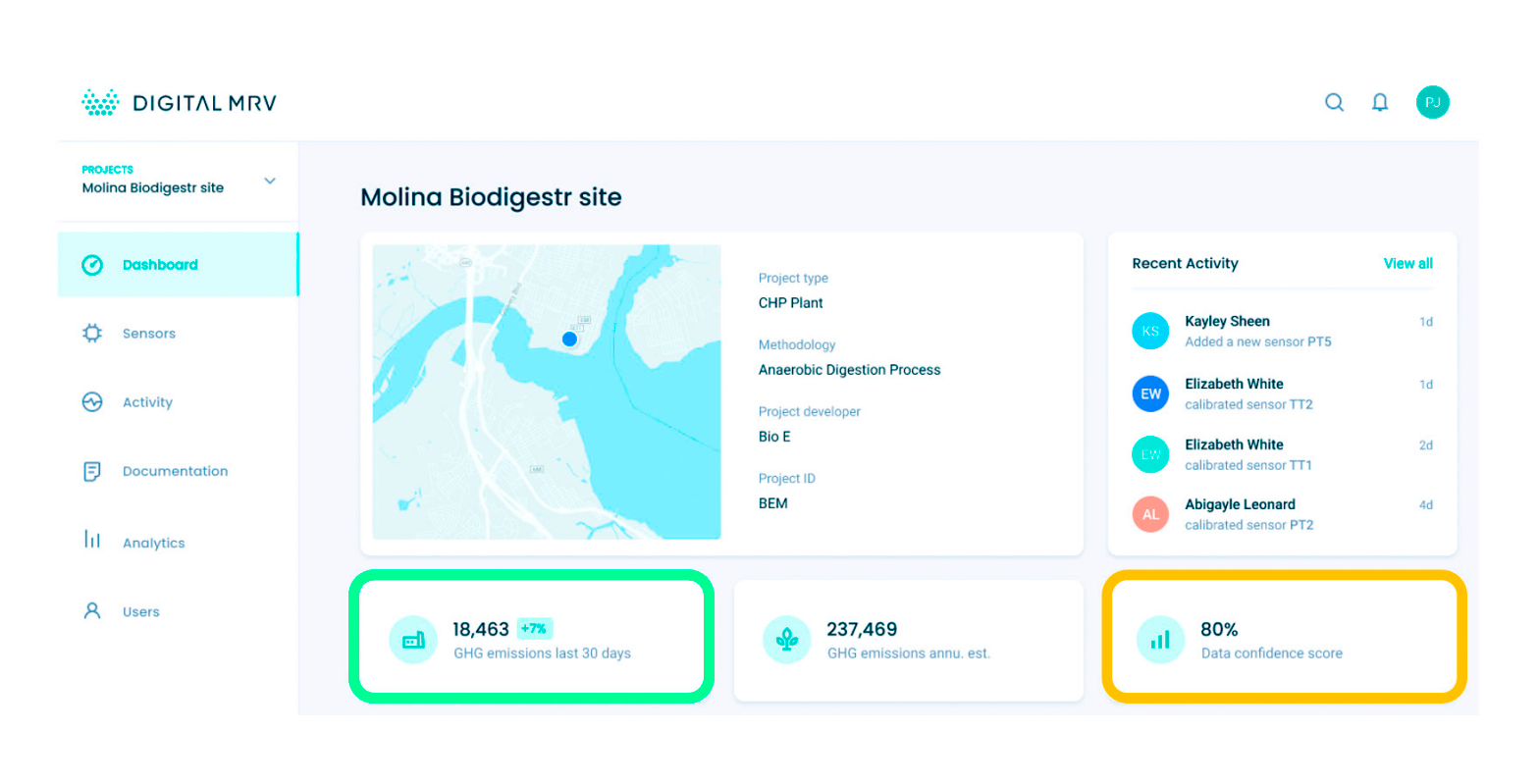

A dashboard showing gathered data along with confidence scores (image credit)

Project Alvarium’s DCF built on the IOTA Tangle is used to gather data, run it through Alvarium’s algorithm, and then store the results on a ledger. During the webinar, Steve Todd pointed out that the system takes in data from sensors, as well as manual readings (taken by people). This introduces additional factors that Alvarium has to account for.

When it comes to sensor data, Alvarium is able to record the levels of security as the information travels through the entire system, resulting in an accurate trustworthiness score. As for the data taken by facility personnel, the system can only measure the security of the server where the information was entered. As a result, manual readings tend to have a lower confidence score.

“Adding data to an immutable ledger can increase confidence, because it brings tamper resistance, but it doesn’t quantify how the data is managed along the way. If you’re taking bad data and putting it into a ledger it’s a little more secure but it’s still bad data.” —Mathew Yarger, DigitalMRV

The types of data gathered by the Digital MRV system (image credit)

Future plans

According to a wiki at LF Edge, in 2023, the project’s team aims to focus on growing adoption, partner engagement, and making Alvarium more flexible and applicable to use cases beyond the IoT.

Aligning with this strategy, on February 7, 2023, Dell joined the Hedera Governing Council. The organization stands behind Hedera, an open-source public ledger, which could help Alvarium with contract automation, ESG reporting, and other R&D activities, the announcement says.

Currently, the development of Alvarium is governed by a Technical Steering Committee (TSC), which was formed after the project was admitted to LF Edge in 2021. The TSC meets biweekly to discuss the roadmap, architecture, potential partnerships, etc.

During a virtual meeting on March 13, 2023, the TSC discussed the issue of reducing duplication in trust scores. Right now, device-related information (the presence of a TPM, secure boot, etc.) is annotated for every piece of data. However, because these hardware factors cannot change between two points of data capture, the information is redundant. As such, Trevor Conn proposed associating such data with the device profile of confidence instead.
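The proposed deduplication could look roughly like the Python sketch below, which moves device-level annotations into a per-device profile and keeps only data-level annotations on each reading. The field names, the `DEVICE_LEVEL` set, and the `split_annotations` helper are assumptions made for illustration; the article does not show how the TSC intends to implement this.

```python
# Assumption: these annotation kinds describe the device, not an individual reading.
DEVICE_LEVEL = {"tpm", "secure_boot"}


def split_annotations(readings):
    """Return (device_profiles, slim_readings).

    device_profiles maps device_id to its device-level annotations, stored once.
    slim_readings keep only the annotations that can vary per reading.
    """
    profiles = {}
    slim = []
    for reading in readings:
        notes = reading["annotations"]
        device_part = {k: v for k, v in notes.items() if k in DEVICE_LEVEL}
        data_part = {k: v for k, v in notes.items() if k not in DEVICE_LEVEL}
        profiles.setdefault(reading["device_id"], device_part)
        slim.append({
            "device_id": reading["device_id"],
            "value": reading["value"],
            "annotations": data_part,
        })
    return profiles, slim


readings = [
    {"device_id": "sensor-1", "value": 1.2,
     "annotations": {"tpm": True, "secure_boot": True, "tls": True}},
    {"device_id": "sensor-1", "value": 1.3,
     "annotations": {"tpm": True, "secure_boot": True, "tls": False}},
]
profiles, slim = split_annotations(readings)
print(profiles)  # {'sensor-1': {'tpm': True, 'secure_boot': True}}
print([r["annotations"] for r in slim])  # [{'tls': True}, {'tls': False}]
```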

Another proposed improvement involved operationalizing the confidence score of a software artifact resulting from a CI/CD pipeline. Here, Trevor Conn mentioned using a software bill of materials to ensure that a given build was created successfully and that there were no exploits in the pipeline. In the future, this could help orchestrators to make decisions about whether or not to deploy or use a particular piece of software. For example, companies can create a rule to block any workload that has a trust score lower than 90%.
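The 90% rule mentioned above can be expressed as a simple policy gate. This is a minimal Python sketch with hypothetical workload names and scores; how an orchestrator would actually obtain the trust score is outside what the article describes.

```python
def is_deployable(trust_score, threshold=0.90):
    """Allow a workload only if its trust score meets the threshold (scores in 0.0 to 1.0)."""
    if not 0.0 <= trust_score <= 1.0:
        raise ValueError("trust_score must be between 0.0 and 1.0")
    return trust_score >= threshold


# Hypothetical workloads and scores, for illustration only.
workloads = {"vision-model": 0.95, "legacy-agent": 0.72, "edge-gateway": 0.90}
blocked = sorted(name for name, score in workloads.items() if not is_deployable(score))
print(blocked)  # ['legacy-agent']
```

A score of exactly 90% passes here, since the rule blocks only scores lower than the threshold.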

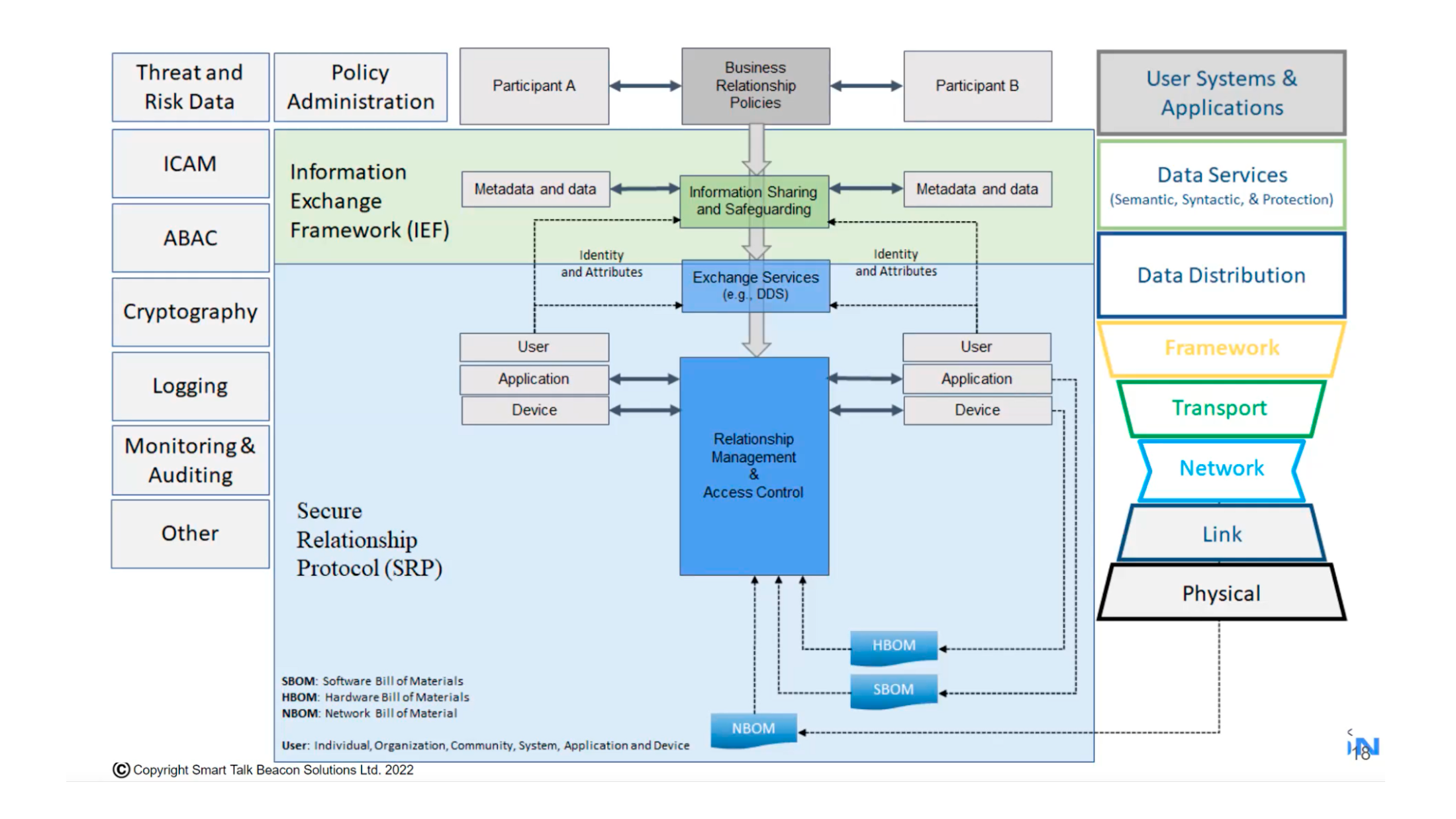

During the meeting on March 27, 2023, the TSC discussed Secure Relationship Protocol Network Operating System (SRPNetOS). The project uses bills of materials, which contain a list of all the hardware and software components used to build a particular product, application, or network. A DCF could use this information to check if any of the components have unpatched vulnerabilities and assign a more accurate trust score.
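One way a DCF might use a bill of materials is to match its components against a list of known unpatched vulnerabilities and record the result as an annotation. The Python sketch below assumes a toy advisory set keyed by name and version. The component names are made up, and neither the data model nor the functions come from SRPNetOS or Alvarium.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    version: str


def unpatched_components(bom, advisories):
    """Return BOM components whose (name, version) pair appears in the advisory set."""
    return [c for c in bom if (c.name, c.version) in advisories]


def bom_annotation(bom, advisories):
    """Build a pass/fail annotation that a scoring step could take into account."""
    flagged = unpatched_components(bom, advisories)
    return {
        "kind": "bom",
        "satisfied": not flagged,
        "flagged": [f"{c.name}@{c.version}" for c in flagged],
    }


# Made-up components and advisories, for illustration only.
bom = [Component("example-tls-lib", "1.0.2"), Component("example-mqtt-client", "3.1.0")]
advisories = {("example-tls-lib", "1.0.2")}
print(bom_annotation(bom, advisories))
```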

Example architecture of a policy-driven network (image credit)

Those who want to learn more about Project Alvarium can find its code in this GitHub repository. Dell’s technical paper explains the vision behind Alvarium, and there is also an overview of the project in the wiki. If you want to follow the project more closely, check out its mailing lists for TSC-related and other items, as well as official Twitter and LinkedIn pages. There is also an #alvarium channel in the LF Edge Slack workspace.

Frequently asked questions (FAQ)

- Why is there an issue of trust with IoT data?

Data that moves from an IoT sensor to the end app passes through other devices and systems, and each one of those could be used to tamper with the information. Furthermore, an attacker can use advanced AI tools to generate extremely realistic fake data that can easily fool a human.

- What’s wrong with a zero-trust approach for IoT?

A zero-trust approach means treating all data as untrustworthy until proven otherwise. This is extremely difficult to scale for an IoT system with thousands of devices sending packets every few seconds.

- What is a data confidence fabric (DCF)?

A DCF is a loosely coupled collection of trust insertion technologies that measure how trustworthy data is as information moves from devices to apps. The system assigns confidence scores based on the presence of a TPM (trusted platform module), secure boot, TLS encryption, signature verification, hash, etc.

- Which companies work on Project Alvarium?

Project Alvarium was developed internally at Dell and donated to the Linux Foundation. Now, in addition to Dell, the project is supported by a number of industry leaders, including IOTA Foundation, Intel, Arm, IBM, VMware, OSIsoft, and Unisys. The team behind Alvarium also collaborated with ZEDEDA, ClimateCHECK, and DigitalMRV when creating the first pilot implementation for VSPT Wine Group.

Want details? Watch the videos!

In this March 2023 webinar, Kathy Giori, Steve Todd, and Mathew Yarger presented the pilot implementation of Project Alvarium for a biodigestion plant in Chile.

During another webinar (August 2022), Kathy Giori went into more technical details on how the biodigestion plant works, as well as the open-source IoT technologies at play.

Further reading

- Rule-Based Stream Data Processing at the Edge with eKuiper

- Scaling Industrial IoT in Manufacturing: Challenges and Guidelines

- The Ins and Outs of the IoT for Transportation and Logistics

About the experts

Steve Todd is Fellow and VP of Data Innovation and Strategy in the Dell Technologies Office of the CTO. He is a long-time inventor in HiTech, having filed over 400 patent applications with the USPTO. Steve’s innovations and inventions have generated tens of billions of dollars in global customer purchases. His current focus is the trustworthy processing of edge data. As a cofounder of Project Alvarium, Steve is driving the exploration of distributed ledger technology with partners such as IOTA and Hedera. He earned Bachelor’s and Master’s Degrees in Computer Science from the University of New Hampshire.

Trevor Conn is Distinguished Engineer at Dell Technologies. He is currently working as Research Lead for Digital Twins in the Dell Office of the CTO and assists in the design and delivery of distributed edge-to-cloud solutions. Trevor has extensive experience with .NET (C#), ASP.NET / MVC / WebAPI, Go, MQTT, RabbitMQ, Apache Kafka, SQL Server, MongoDB, NServiceBus, and ElasticSearch. Being a cofounder of Project Alvarium, he currently works as its principal architect and engineer.

Mathew Yarger is Cofounder of DigitalMRV and Advisor at IOTA. He has a diverse computer science and network security background, as well as experience with blockchain, DLT, and Distributed Systems Theory. Mathew has expertise in enabling flexible and privacy-centric data transfer for smart cities, critical infrastructure, environmental and energy systems, as well as autonomous vehicles. At the IOTA Foundation, he worked in leadership and engineering positions connected with sustainability, smart cities, smart mobility, and security. He was Director of Cybersecurity at Geometric Energy Corporation, and Digital Forensic Examiner at the DoD Cyber Crime Center Cyber Forensics Lab while working for General Dynamics Mission Systems. Before that, Mathew served as Cybersecurity Analyst in the US Army.

Kathy Giori is Director of Product Engineering at ZEDEDA and CEO at Tricyrcle. Kathy has a background in open-source collaboration, embedded Linux platform strategy, and securely managed IoT product definition, integration, and testing. At Mozilla, she was Senior Product Manager and Staff Developer Evangelist. Kathy also served as Vice President of Operations at Arduino, working on open-source collaboration and embedded Linux platform strategy. She also holds an MSEE from Stanford University and a BSEE from the University of Minnesota.
